
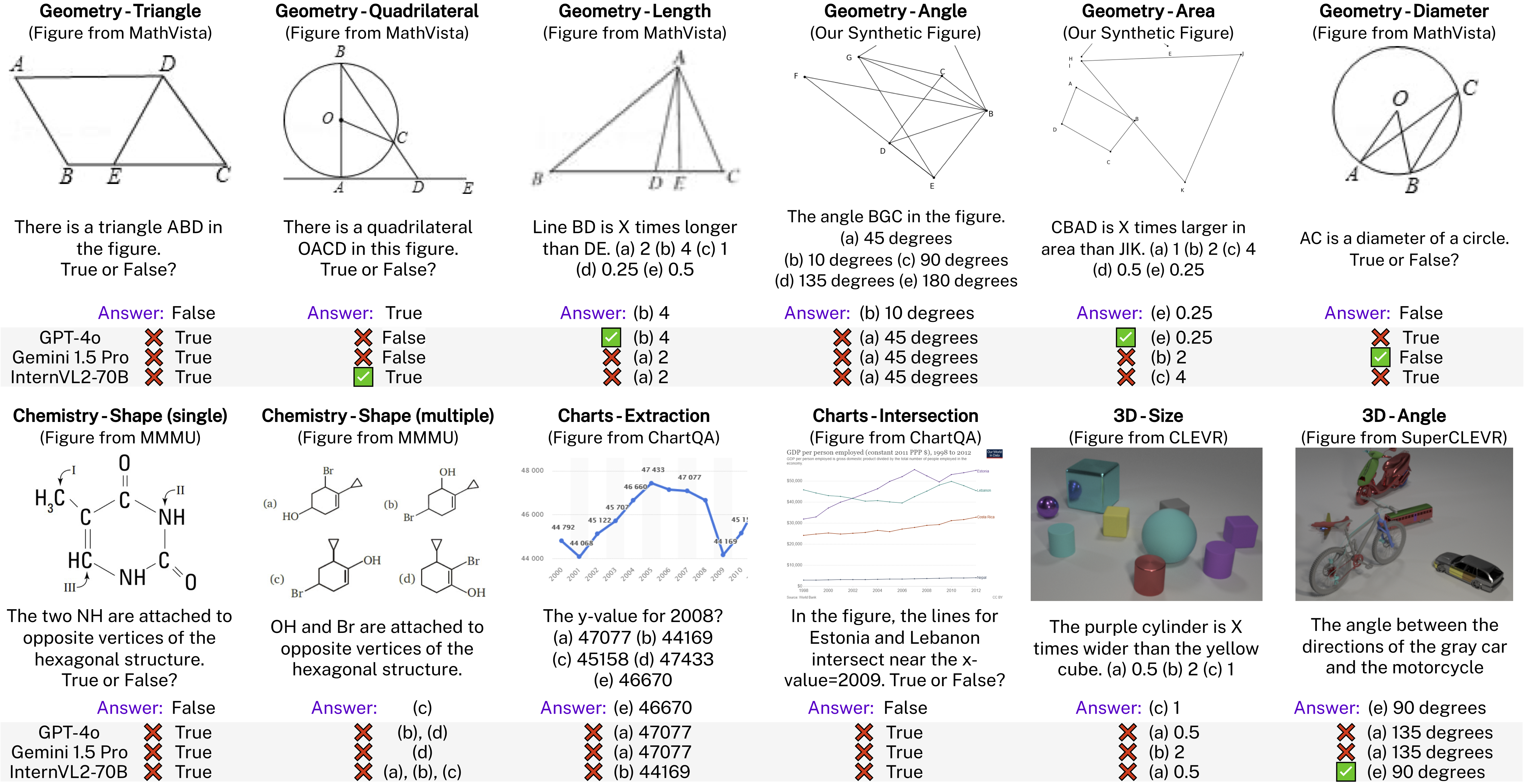

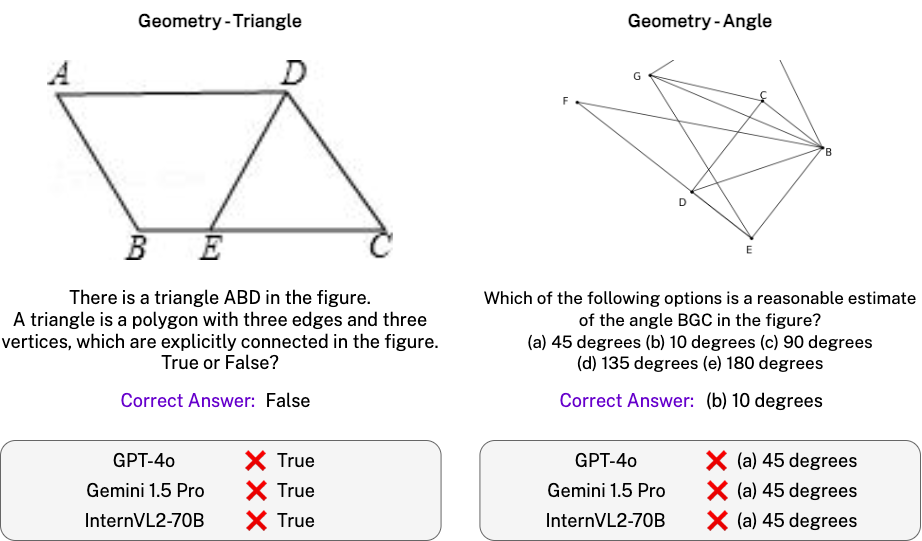

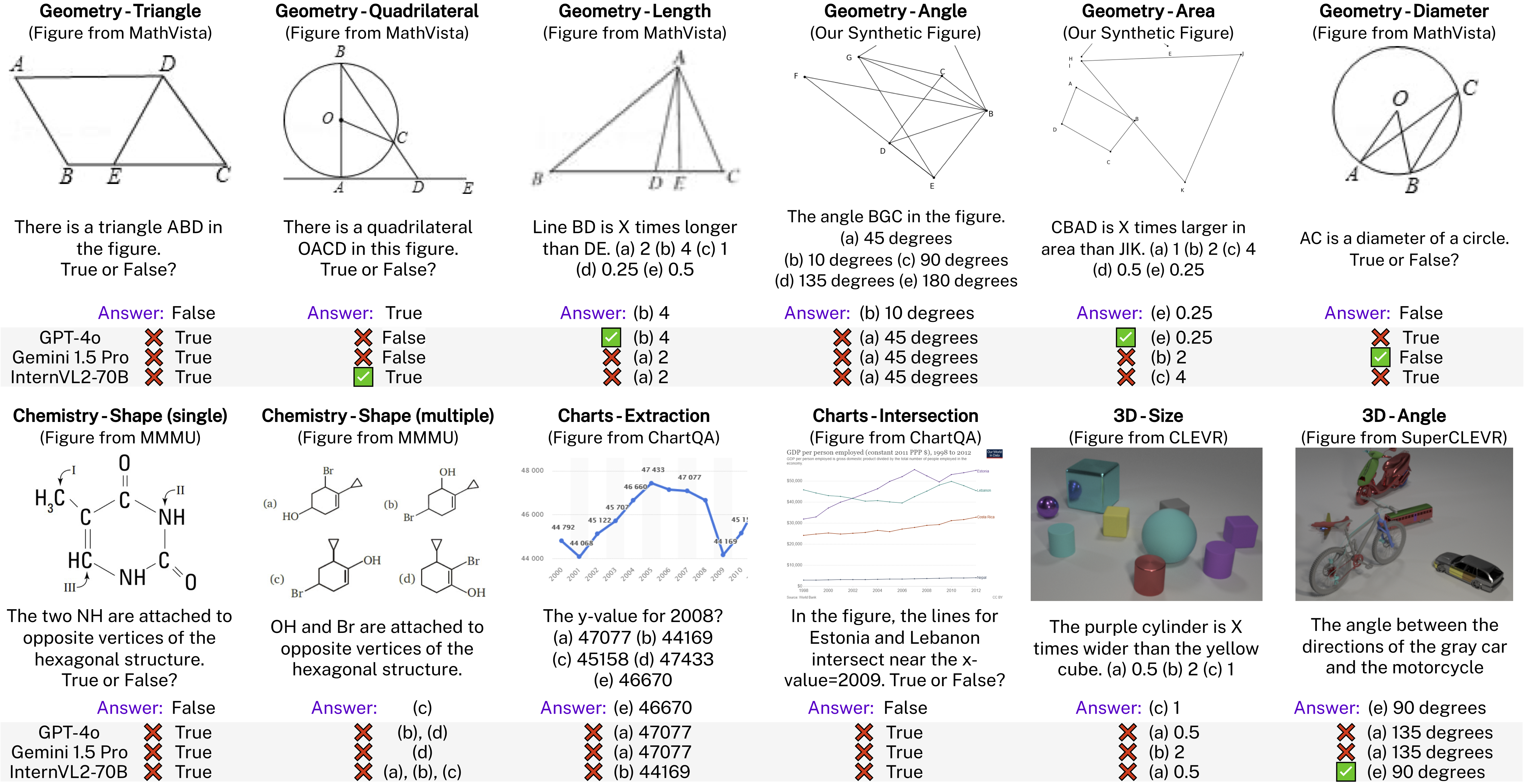

Examples from VisOnlyQA and answers from LVLMs. VisOnlyQA includes 12 tasks for evaluating the capability of LVLMs to perceive basic geometric information, such as angle, shape, and size. State-of-the-art LVLMs still often fail to perceive geometric information accurately. Questions in this figure are abbreviated.


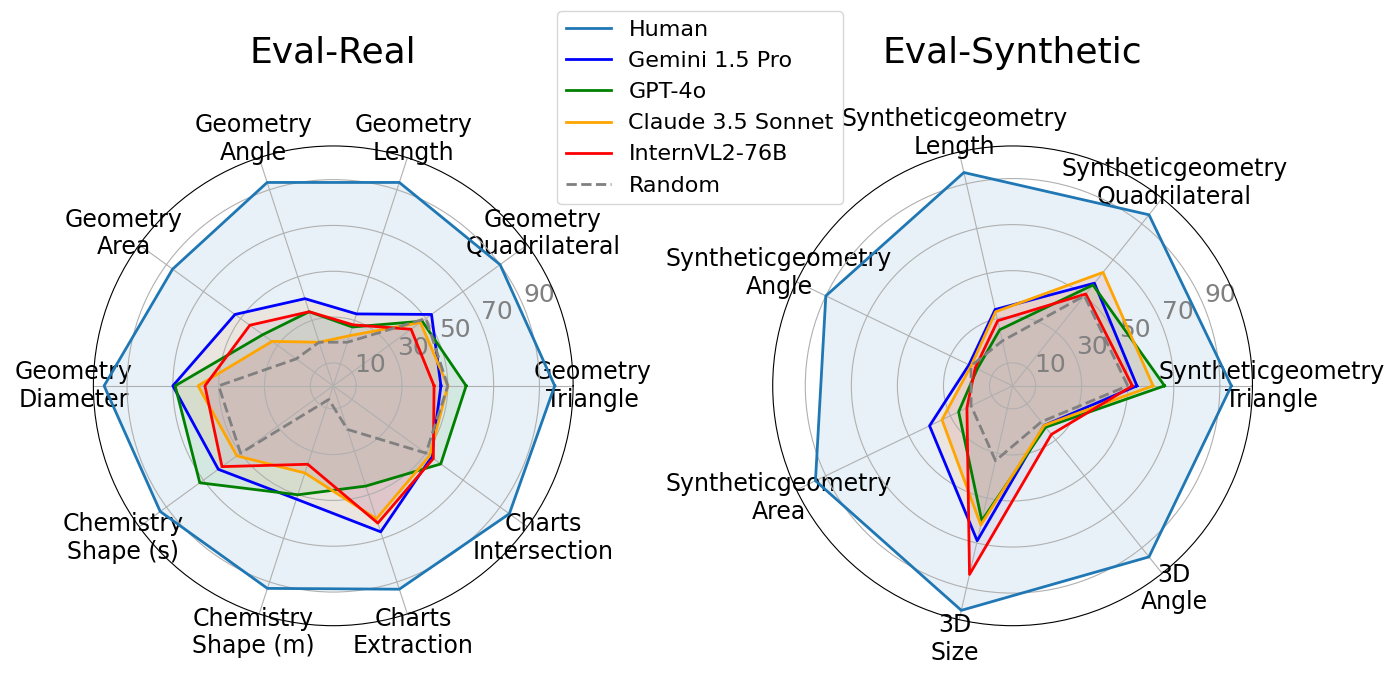

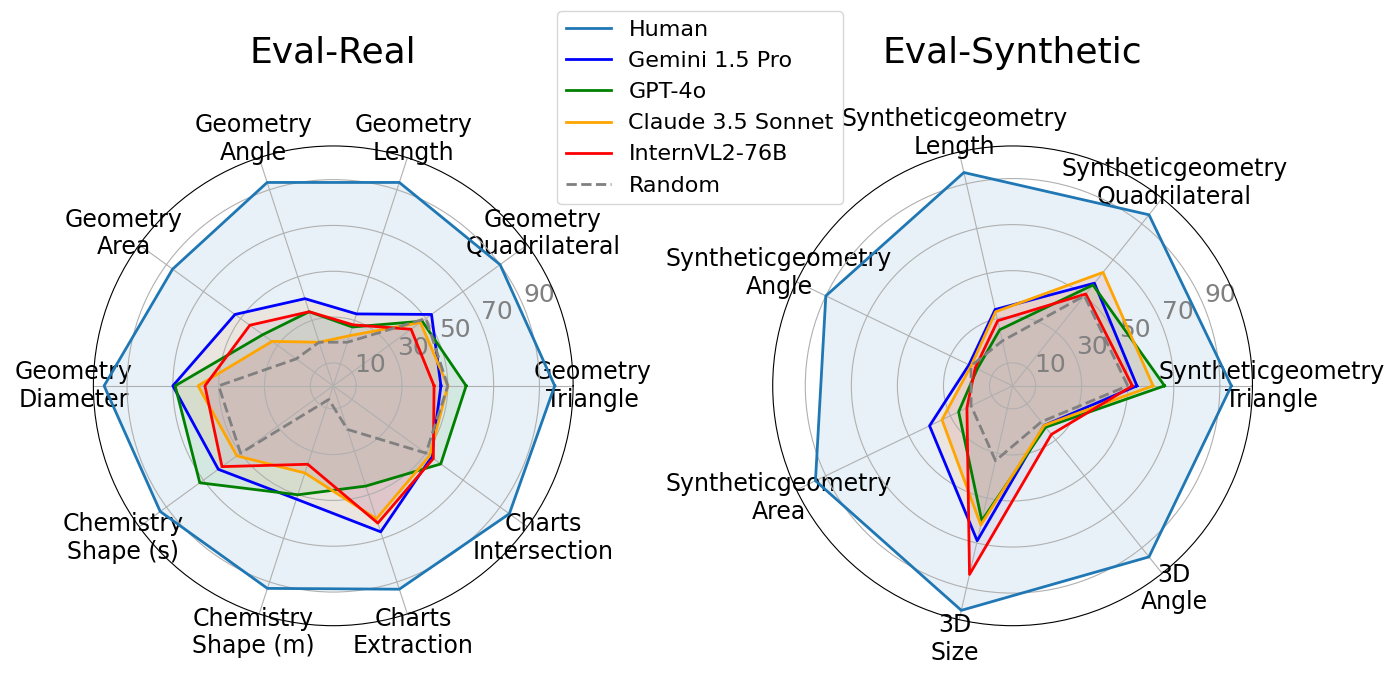

Accuracy scores of LVLMs and humans on VisOnlyQA. Even state-of-the-art LVLMs, such as GPT-4o and Gemini 1.5 Pro, still struggle with visual perception of geometric information, and their performance is far below human performance.

Large Vision Language Models (LVLMs) have demonstrated significant advancement across a range of vision-language tasks, including visual math reasoning and academic exams. However, there is a lack of analysis of the capability of LVLMs to perceive visual information in images. Specifically, it remains unclear how accurately LVLMs can perceive geometric information, such as shape, angle, and size, although the perception of these properties is crucial for tasks that require a detailed visual understanding.

To bridge this gap, we introduce VisOnlyQA, a dataset for evaluating the geometric perception of LVLMs, consisting of 12 tasks that ask about geometric information in geometric shapes, charts, chemical structures, and 3D shapes. Our experiments on VisOnlyQA reveal that LVLMs still often cannot accurately perceive basic geometric information in images.

Our experiments highlight the following findings:

More examples are provided below.

VisOnlyQA includes questions about geometric information in four types of figures: geometric shapes, chemical structures, charts, and 3D shapes. The figures come from two types of sources: Real and Synthetic.

VisOnlyQA includes the following splits.


| Split | Geometry: Triangle | Geometry: Quadrilateral | Geometry: Length | Geometry: Angle | Geometry: Area | Geometry: Diameter | Chemistry: Shape (s) | Chemistry: Shape (m) | Charts: Extraction | Charts: Intersection | 3D: Size | 3D: Angle | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Eval-Real | 100 | 100 | 100 | 100 | 100 | 100 | 50 | 50 | 100 | 100 | -- | -- | 900 |
| Eval-Synthetic | 100 | 100 | 100 | 100 | 100 | -- | -- | -- | -- | -- | 100 | 100 | 700 |
| Train | 10k | 10k | 10k | 10k | 10k | -- | -- | -- | -- | -- | 10k | 10k | 70k |
| Answer Format | True/False | True/False | True/False | 5 options | 5 options | 5 options | True/False | Select Multiple | 5 options | True/False | 3 options | 5 options | |
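The answer formats determine the accuracy of uniform random guessing, which the Random rows in the result tables report. The sketch below computes that chance level per format. The size of the answer space for "Select Multiple" is not stated on this page; the value of 16 (for example, any subset of four options) is an assumption chosen because it matches the 6.2 shown in the Random row.

```python
def chance_accuracy(num_equally_likely_answers: int) -> float:
    """Expected accuracy (in percent) of guessing uniformly at random."""
    return 100.0 / num_equally_likely_answers

# Number of distinct answers a guesser can pick from, per answer format.
# The entry for "Select Multiple" is an assumption (see the text above).
ANSWER_SPACE = {
    "True/False": 2,
    "3 options": 3,
    "5 options": 5,
    "Select Multiple": 16,  # assumed: 2**4 possible selections
}

CHANCE = {fmt: chance_accuracy(n) for fmt, n in ANSWER_SPACE.items()}

for fmt, acc in CHANCE.items():
    print(f"{fmt}: {acc:.2f}%")
```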

Question:

There is a triangle DBC in the figure. True or False?

A triangle is a polygon with three edges and three vertices, which are explicitly connected in the figure.

Gold Answer: False

| Model | Answer w/ CoT | | Answer w/o CoT | |
|---|---|---|---|---|
| GPT-4o | In the figure, there is a triangle labeled \( \triangle ABC \). The line segment \( DE \) is parallel to \( BC \) and divides \( \triangle ABC \) into two smaller triangles, \( \triangle ADE \) and \( \triangle BDE \). However, there is no triangle labeled \( \triangle DBC \) in the figure. Final answer: False. | ✅ | False. | ✅ |
| Gemini 1.5 Pro | The figure shows points D, B, and C connected by line segments DB, BC, and CD. These three segments and the points D, B, and C form a triangle. Final Answer: True | ❌ | True | ❌ |
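Responses like the ones above have to be reduced to a single label before they can be compared with the gold answer. The following is a minimal sketch of one way to do that for True/False questions. The parsing rule (look for a "Final answer:" marker, otherwise take the last True/False token) and the function names are hypothetical illustrations, not the scoring procedure used for VisOnlyQA.

```python
import re
from typing import Optional

# Hypothetical parsing rules, for illustration only.
FINAL_ANSWER = re.compile(r"final answer:\s*(true|false)", re.IGNORECASE)
BARE_LABEL = re.compile(r"\b(true|false)\b", re.IGNORECASE)

def extract_true_false(response: str) -> Optional[str]:
    """Return 'True' or 'False' parsed from a response, or None if absent."""
    match = FINAL_ANSWER.search(response)
    if match is not None:
        return match.group(1).capitalize()
    # No explicit marker: fall back to the last True/False token.
    labels = BARE_LABEL.findall(response)
    return labels[-1].capitalize() if labels else None

def is_correct(response: str, gold: str) -> bool:
    """Compare the parsed label with the gold answer."""
    return extract_true_false(response) == gold

gold = "False"
print(is_correct("... no triangle labeled DBC in the figure. Final answer: False.", gold))
print(is_correct("... form a triangle. Final Answer: True", gold))
```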

We evaluate 23 LVLMs, including GPT-4o and Gemini 2.5 Pro, on VisOnlyQA. Our results show that even state-of-the-art LVLMs still often cannot accurately perceive basic geometric information in images.


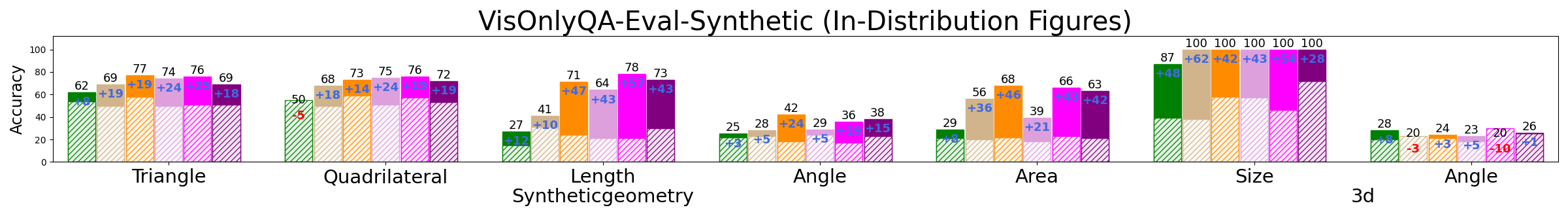

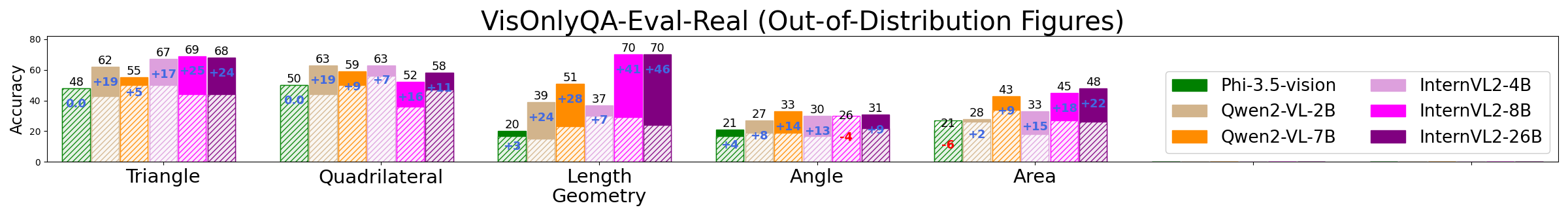

We evaluate LVLMs fine-tuned on the training set of VisOnlyQA.

Positive results:

All models achieve near-perfect performance in 3D-Size after fine-tuning, and models larger than 7B show large improvement even on the out-of-distribution figures in Geometry-Length and Area. This result partially supports our hypothesis that training data for existing LVLMs are insufficient and indicates that our approach of using synthetic training data has the potential to improve the capability of LVLMs to perceive geometric information.

Negative results:

However, fine-tuned models still often perform far below humans, even on in-distribution figures. Specifically, fine-tuning barely improves performance on 3D-Angle, and we observe relatively small improvements on Geometry-Triangle, Quadrilateral, and Angle, even on in-distribution figures. This result indicates that fine-tuning on datasets that require accurate perception of geometric information is not always effective; its effect depends on the properties of the target task.

Accuracy scores of LVLMs fine-tuned on the training set of VisOnlyQA.

InternVL2 4B and 8B share one vision transformer (ViT) as their visual encoder, as do Qwen2-VL 2B and 7B; within each pair, only the language model differs. We expected the visual encoder to play the major role in geometric perception, so that models with the same ViT would perform similarly on VisOnlyQA. We expected this to hold particularly after fine-tuning, since fine-tuning should help models understand the tasks and thereby reduce the impact of the language model's reasoning capability.

However, there are performance gaps between LVLMs that share a ViT but use different language models, and the gaps mostly become larger after fine-tuning. This observation indicates that the language model affects an LVLM's capability to perceive geometric information, and that its influence is not limited to reasoning or knowledge.

This result suggests that language models play a crucial role in processing visual information encoded by ViT, and strong language models are needed even for tasks that do not require challenging reasoning or knowledge.

Larger language models improve the performance of LVLMs on the Eval splits of VisOnlyQA when the visual encoder is the same.

| Model | ViT | LLM | Original (Real) | Original (Synthetic) | Fine-tuned (Real) | Fine-tuned (Synthetic) |
|---|---|---|---|---|---|---|
| InternVL2-4B | 304M | 3.8B | 38.4 | 34.1 | 46.0 | 57.7 |
| InternVL2-8B | 304M | 7.7B | 40.7 | 35.0 | 52.4 | 64.6 |
| Qwen2-VL-2B | 675M | 1.5B | 32.3 | 33.6 | 43.8 | 54.6 |
| Qwen2-VL-7B | 675M | 7.6B | 38.9 | 37.1 | 48.2 | 65.0 |
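To make the comparison within each same-ViT pair explicit, the sketch below computes the accuracy gap between the larger and the smaller language model for every column of the table above. The numbers are copied from the table; the variable and function names are illustrative.

```python
# Column order: original Real, original Synthetic, fine-tuned Real, fine-tuned Synthetic.
COLUMNS = ("original_real", "original_synthetic", "finetuned_real", "finetuned_synthetic")

SCORES = {
    "InternVL2-4B": (38.4, 34.1, 46.0, 57.7),
    "InternVL2-8B": (40.7, 35.0, 52.4, 64.6),
    "Qwen2-VL-2B": (32.3, 33.6, 43.8, 54.6),
    "Qwen2-VL-7B": (38.9, 37.1, 48.2, 65.0),
}

# Each pair shares a ViT and differs only in the language model.
PAIRS = [("InternVL2-4B", "InternVL2-8B"), ("Qwen2-VL-2B", "Qwen2-VL-7B")]

def pair_gaps(small: str, large: str) -> dict:
    """Accuracy of the larger-LLM model minus the smaller-LLM model, per column."""
    return {
        col: round(hi - lo, 1)
        for col, lo, hi in zip(COLUMNS, SCORES[small], SCORES[large])
    }

for small, large in PAIRS:
    print(f"{large} minus {small}: {pair_gaps(small, large)}")
```

With these numbers, the gap widens after fine-tuning in three of the four comparisons; for Qwen2-VL on Eval-Real it narrows from 6.6 to 4.4 points.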

Accuracy scores on the Eval-Real subset (900 examples in total) of VisOnlyQA.

| Model | Triangle | Quadrilateral | Diameter | Length | Angle | Area | Shape (s) | Shape (m) | Extraction | Intersection | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Random | 50.0 | 50.0 | 20.0 | 20.0 | 20.0 | 50.0 | 50.0 | 6.2 | 20.0 | 50.0 | 34.2 |
| Phi-3.5-vision | 48.0 | 50.0 | 17.0 | 17.0 | 27.0 | 50.0 | 54.0 | 10.0 | 29.0 | 50.0 | 35.6 |
| LLaVA-Next 8B | 50.0 | 50.0 | 16.0 | 15.0 | 26.0 | 49.0 | 42.0 | 4.0 | 22.0 | 49.0 | 33.3 |
| LLaVA-Next 34B | 49.0 | 50.0 | 30.0 | 15.0 | 22.0 | 44.0 | 34.0 | 10.0 | 35.0 | 50.0 | 35.2 |
| Llama 3.2 11B | 50.0 | 47.0 | 17.0 | 15.0 | 26.0 | 43.0 | 34.0 | 8.0 | 32.0 | 50.0 | 33.4 |
| Llama 3.2 90B | 51.0 | 46.0 | 14.0 | 28.0 | 27.0 | 48.0 | 60.0 | 20.0 | 35.0 | 45.0 | 37.1 |
| MolMo 7B-D | 49.0 | 45.0 | 20.0 | 11.0 | 23.0 | 56.0 | 40.0 | 12.0 | 31.0 | 48.0 | 34.3 |
| MolMo 72B | 44.0 | 47.0 | 22.0 | 25.0 | 33.0 | 50.0 | 48.0 | 30.0 | 46.0 | 52.0 | 39.8 |
| Qwen2-VL-2B | 43.0 | 44.0 | 15.0 | 19.0 | 26.0 | 47.0 | 38.0 | 12.0 | 27.0 | 45.0 | 32.3 |
| Qwen2-VL-7B | 50.0 | 50.0 | 23.0 | 19.0 | 34.0 | 46.0 | 46.0 | 16.0 | 45.0 | 52.0 | 38.9 |
| Qwen2-VL-72B | 44.0 | 52.0 | 27.0 | 27.0 | 37.0 | 61.0 | 56.0 | 36.0 | 53.0 | 53.0 | 44.4 |
| InternVL2-4B | 50.0 | 56.0 | 30.0 | 17.0 | 18.0 | 49.0 | 54.0 | 16.0 | 38.0 | 53.0 | 38.4 |
| InternVL2-8B | 44.0 | 36.0 | 29.0 | 30.0 | 27.0 | 56.0 | 50.0 | 22.0 | 52.0 | 56.0 | 40.7 |
| InternVL2-26B | 44.0 | 47.0 | 24.0 | 22.0 | 26.0 | 55.0 | 58.0 | 28.0 | 47.0 | 46.0 | 39.3 |
| InternVL2-40B | 43.0 | 45.0 | 32.0 | 23.0 | 31.0 | 57.0 | 28.0 | 30.0 | 61.0 | 58.0 | 42.1 |
| InternVL2-76B | 44.0 | 42.0 | 28.0 | 34.0 | 45.0 | 56.0 | 60.0 | 36.0 | 63.0 | 54.0 | 46.0 |
| Claude 3.5 Sonnet | 50.0 | 47.0 | 23.0 | 20.0 | 33.0 | 59.0 | 52.0 | 40.0 | 61.0 | 52.0 | 43.4 |
| Claude Sonnet 4 | 38.0 | 57.0 | 32.0 | 25.0 | 33.0 | 66.0 | 72.0 | 44.0 | 70.0 | 54.0 | 48.1 |
| Claude Opus 4 | 41.0 | 47.0 | 35.0 | 34.0 | 36.0 | 60.0 | 72.0 | 50.0 | 80.0 | 50.0 | 49.3 |
| GPT-4o-mini | 45.0 | 66.0 | 26.0 | 19.0 | 30.0 | 58.0 | 58.0 | 32.0 | 40.0 | 53.0 | 42.4 |
| GPT-4o | 58.0 | 48.0 | 27.0 | 34.0 | 38.0 | 69.0 | 72.0 | 50.0 | 46.0 | 58.0 | 48.8 |
| Gemini 1.5 Flash | 47.0 | 51.0 | 25.0 | 24.0 | 39.0 | 60.0 | 68.0 | 42.0 | 58.0 | 58.0 | 49.2 |
| Gemini 1.5 Pro | 47.0 | 53.0 | 33.0 | 40.0 | 53.0 | 70.0 | 62.0 | 52.0 | 67.0 | 53.0 | 52.6 |
| Gemini 2.5 Pro | 66.0 | 52.0 | 55.0 | 59.0 | 56.0 | 90.0 | 92.0 | 88.0 | 86.0 | 72.0 | 79.0 |
| Human | 96.7 | 90.0 | 93.3 | 93.3 | 86.7 | 100.0 | 93.3 | 93.0 | 93.3 | 95.0 | 93.5 |
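The Eval-Real tasks differ in size (the two Chemistry tasks have 50 examples each, the others 100), so it matters how the Average column is aggregated. The page does not state the method. As a sketch, the code below computes an example-weighted mean, which reproduces the reported averages for the three rows checked here (Random, Phi-3.5-vision, and GPT-4o); treating this as the aggregation rule is an inference from those numbers, not a documented fact.

```python
# Examples per task in Eval-Real, taken from the dataset statistics.
EVAL_REAL_COUNTS = {
    "Triangle": 100, "Quadrilateral": 100, "Diameter": 100, "Length": 100,
    "Angle": 100, "Area": 100, "Shape (s)": 50, "Shape (m)": 50,
    "Extraction": 100, "Intersection": 100,
}
TASKS = list(EVAL_REAL_COUNTS)

# Per-task accuracies copied from the table above, in the same column order.
ROWS = {
    "Random": [50.0, 50.0, 20.0, 20.0, 20.0, 50.0, 50.0, 6.2, 20.0, 50.0],
    "Phi-3.5-vision": [48.0, 50.0, 17.0, 17.0, 27.0, 50.0, 54.0, 10.0, 29.0, 50.0],
    "GPT-4o": [58.0, 48.0, 27.0, 34.0, 38.0, 69.0, 72.0, 50.0, 46.0, 58.0],
}

def weighted_average(accuracies: list) -> float:
    """Mean accuracy over all examples: each task weighted by its example count."""
    total = sum(EVAL_REAL_COUNTS.values())
    weighted = sum(acc * EVAL_REAL_COUNTS[task] for task, acc in zip(TASKS, accuracies))
    return weighted / total

AVERAGES = {name: round(weighted_average(accs), 1) for name, accs in ROWS.items()}
print(AVERAGES)
```

An unweighted mean over the ten tasks would give the two 50-example Chemistry tasks the same influence as the 100-example tasks and would yield different values.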

Accuracy scores on the Eval-Synthetic subset (700 examples in total) of VisOnlyQA.

| Model | Triangle | Quadrilateral | Length | Angle | Area | Size | Angle (3D) | Average |
|---|---|---|---|---|---|---|---|---|
| Random | 50.0 | 50.0 | 20.0 | 20.0 | 20.0 | 33.3 | 20.0 | 30.5 |
| Phi-3.5-vision | 54.0 | 55.0 | 15.0 | 22.0 | 21.0 | 39.0 | 20.0 | 32.3 |
| LLaVA-Next 8B | 50.0 | 50.0 | 17.0 | 21.0 | 19.0 | 26.0 | 19.0 | 28.9 |
| LLaVA-Next 34B | 51.0 | 50.0 | 25.0 | 24.0 | 20.0 | 48.0 | 32.0 | 35.7 |
| Llama 3.2 11B | 54.0 | 52.0 | 31.0 | 21.0 | 21.0 | 32.0 | 21.0 | 33.1 |
| Llama 3.2 90B | 61.0 | 56.0 | 12.0 | 16.0 | 20.0 | 45.0 | 26.0 | 33.7 |
| MolMo 7B-D | 49.0 | 56.0 | 22.0 | 20.0 | 14.0 | 29.0 | 27.0 | 31.0 |
| MolMo 72B | 51.0 | 55.0 | 23.0 | 22.0 | 18.0 | 50.0 | 27.0 | 35.1 |
| Qwen2-VL-2B | 50.0 | 50.0 | 31.0 | 23.0 | 20.0 | 38.0 | 23.0 | 33.6 |
| Qwen2-VL-7B | 58.0 | 59.0 | 24.0 | 18.0 | 22.0 | 58.0 | 21.0 | 37.1 |
| Qwen2-VL-72B | 51.0 | 56.0 | 33.0 | 21.0 | 26.0 | 76.0 | 27.0 | 41.4 |
| InternVL2-4B | 50.0 | 51.0 | 21.0 | 24.0 | 18.0 | 57.0 | 18.0 | 34.1 |
| InternVL2-8B | 51.0 | 57.0 | 21.0 | 17.0 | 23.0 | 46.0 | 30.0 | 35.0 |
| InternVL2-26B | 51.0 | 53.0 | 30.0 | 23.0 | 21.0 | 72.0 | 25.0 | 39.3 |
| InternVL2-40B | 51.0 | 54.0 | 30.0 | 23.0 | 21.0 | 69.0 | 25.0 | 39.0 |
| InternVL2-76B | 52.0 | 51.0 | 29.0 | 18.0 | 22.0 | 84.0 | 27.0 | 40.4 |
| Claude 3.5 Sonnet | 61.0 | 63.0 | 33.0 | 20.0 | 34.0 | 62.0 | 22.0 | 42.1 |
| Claude Sonnet 4 | 38.0 | 57.0 | 32.0 | 25.0 | 33.0 | 54.0 | 25.0 | 37.7 |
| Claude Opus 4 | 41.0 | 47.0 | 35.0 | 34.0 | 36.0 | 50.0 | 34.0 | 39.6 |
| GPT-4o-mini | 60.0 | 51.0 | 21.0 | 20.0 | 18.0 | 27.0 | 23.0 | 31.4 |
| GPT-4o | 66.0 | 56.0 | 25.0 | 17.0 | 26.0 | 60.0 | 23.0 | 39.0 |
| Gemini 1.5 Flash | 54.0 | 51.0 | 29.0 | 21.0 | 19.0 | 60.0 | 21.0 | 36.4 |
| Gemini 1.5 Pro | 54.0 | 57.0 | 34.0 | 21.0 | 40.0 | 69.0 | 22.0 | 42.4 |
| Gemini 2.5 Pro | 66.0 | 52.0 | 55.0 | 59.0 | 56.0 | 72.0 | 59.0 | 59.9 |
| Human | 95.0 | 95.0 | 95.0 | 90.0 | 95.0 | 100.0 | 95.0 | 95.0 |